Hands on with GitHub Actions and Azure Stack Hub

The past few months, I’ve been working with GitHub Actions as the CI/CD platform for Azure hosted applications. Whilst that wasn’t using Azure Stack Hub, I wanted to see how I could use Actions within my environment, as I really like the low entry barrier, and GitHub is fairly ubiquitous.

From a high level, in order to achieve the integration, we need to use an Action Runner, which in simple terms is an agent running on a VM (or container) that polls for workflow action events and executes those actions on the Action runner system where the agent resides. GitHub can provide containerized runners out-of-the-box, and that’s great if you’re using a public cloud, but in the vast majority of cases, Azure Stack Hub is in a corporate network, so we can use a self-hosted runner for these scenarios, and that’s what I’ll be using here.

There are already some tutorials and videos on how you can integrate into your Azure Stack Hub environment, but I wanted to go a little deeper, from showing and explaining how to get your environment set up, to automating the creation of a self-hosted runner in your Azure Stack Hub tenant.

First, here are some links to some great content:

https://channel9.msdn.com/Shows/DevOps-Lab/GitHub-Actions-on-Azure-Stack-Hub

and the official docs:

As ever, the documentation assumes you are familiar with the platform. I won’t assume that, so I’ll go through what you need to do step by step, and hopefully you’ll be successful first time!

Pre-requisites

Before you get started, here’s what you’ll need up-front:

A GitHub account and a repository. I would highly recommend a private repo, as you will be deploying a self-hosted runner which is linked to this repo - this will be able to run code in your environment. GitHub make this recommendation here.

An Azure Stack Hub environment with a Tenant Subscription that you can access (assumption here is we’re using Azure AD as the identity provider)

(Optional) A service principal with contributor rights to the Azure Stack Hub tenant subscription. If you don’t have one, I detail how to create it later.

A GitHub Personal Access Token (PAT)

Credentials to connect to Azure Stack Hub

First thing we need is a service principal that has rights to the tenant subscription (I recommend contributor role). We will use these credentials to create a secret within our GitHub repo that we will use to connect to the subscription.

If you don’t already have an SP, you can create one by following the official documentation.

All my examples are being run from Linux and Azure CLI (Ubuntu WSL to be specific :) )

First, register your Azure Stack Hub tenant environment (if you haven’t already done so)

az cloud register \
  -n "AzureStackHubTenant" \
  --endpoint-resource-manager "https://management.<region>.<FQDN>" \
  --suffix-storage-endpoint ".<region>.<FQDN>" \
  --suffix-keyvault-dns ".vault.<region>.<FQDN>" \
  --endpoint-active-directory-graph-resource-id "https://graph.windows.net/" \
  --profile 2019-03-01-hybrid

We need to connect to our Azure Stack Hub environment so that when the Service Principal is created, we can assign it to a scope.

Once the environment is defined, we need to make sure this is active.

az cloud set -n AzureStackHubTenant

Run this command to confirm that it is set correctly:

az cloud list -o table

Next, let’s connect to our subscription hosted on Azure Stack Hub.

az login

If there’s more than one subscription, you might need to specify which subscription you want to connect to.

az account set --subscription <subName>

We can see above that I have set the active subscription to ‘DannyTestSub’.

Next, we want to create our service principal. To make things easier, let’s have the CLI do the work in assigning the scope to the user subscription:

# Retrieve the subscription ID
SUBID=$(az account show --query id -o tsv)
az ad sp create-for-rbac --name "ASH-github-runner" --role contributor \
  --scopes /subscriptions/$SUBID \
  --sdk-auth

Running that should produce something like the following in my environment:

It is important that we copy the JSON output as-is, because we need this exact format to create our GitHub secret. Theoretically, if you already have a client ID and secret, you could construct your own JSON-formatted credential like this:

{
  "clientId": "<your_ClientID>",
  "clientSecret": "<your_Client_secret>",
  "subscriptionId": "<Azure_Stack_Hub_Tenant SubscriptionID>",
  "tenantId": "<Your_Azure_AD_Tenant_Id>",
  "activeDirectoryEndpointUrl": "https://login.microsoftonline.com/",
  "resourceManagerEndpointUrl": "https://management.<REGION>.<FQDN>",
  "activeDirectoryGraphResourceId": "https://graph.windows.net/",
  "sqlManagementEndpointUrl": null,
  "galleryEndpointUrl": "https://providers.<REGION>.local:30016/",
  "managementEndpointUrl": "https://management.<REGION>.<FQDN>"
}
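If you do assemble the credential by hand, a small shell helper can cut down on copy-and-paste mistakes. The following is only a sketch: the function name `make_ash_creds` and its argument order are my own invention, and it simply reproduces the field names and endpoint patterns from the sample above, assuming Azure AD as the identity provider.

```shell
# Hypothetical helper: prints a credential JSON in the same shape as the sample above.
# Arguments: clientId clientSecret subscriptionId tenantId region fqdn
# Note: values are inserted verbatim, so a secret containing a double quote or
# backslash would need JSON escaping first.
make_ash_creds() {
  local client_id="$1" client_secret="$2" sub_id="$3" tenant_id="$4" region="$5" fqdn="$6"
  cat <<EOF
{
  "clientId": "${client_id}",
  "clientSecret": "${client_secret}",
  "subscriptionId": "${sub_id}",
  "tenantId": "${tenant_id}",
  "activeDirectoryEndpointUrl": "https://login.microsoftonline.com/",
  "resourceManagerEndpointUrl": "https://management.${region}.${fqdn}",
  "activeDirectoryGraphResourceId": "https://graph.windows.net/",
  "sqlManagementEndpointUrl": null,
  "galleryEndpointUrl": "https://providers.${region}.local:30016/",
  "managementEndpointUrl": "https://management.${region}.${fqdn}"
}
EOF
}
```

Calling `make_ash_creds <clientId> <clientSecret> <subscriptionId> <tenantId> <region> <FQDN>` prints the JSON to standard output, ready to paste into the GitHub secret.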

Now we have the credentials, we need to set up a secret within GitHub.

From the GitHub portal, connect to your private repo that you will use for Azure Stack Hub automation.

Click on the Settings cog

Click on Secrets

Click on ‘New repository secret’

Enter a name (I’m using AZURESTACKHUB_CREDENTIALS), paste in the JSON content for the SP that was previously created, and then click on Add Secret.

You should now see your newly added credentials under Action Secrets.

We now have our credentials and have set up a secret for Actions to use. Next, we need an Actions runner within our Azure Stack Hub environment to run our workflows on.

If you already have a Windows Server or Linux host running in your Azure Stack Hub tenant subscription, you can follow the manual steps, per the guidance given under the Settings/ Actions / Runner config page:

You can select the OS type of the system you have running and follow the commands.

Note: The ./config.(cmd|sh) command uses a token which has a short lifetime, so be aware if you use this method and are expecting to use it for automating self-hosted runner deployments!

The above method works and is OK if you want to quickly test capabilities. However, I wanted the ability to automate the runner provisioning process, from the VM to the installation of the runner agent.

I did this by creating an ARM template that deploys an Ubuntu VM and runs a Bash script that installs necessary tools (e.g. Azure CLI, Docker, Kubectl, Helm, etc.) and most importantly, deploys the agent and dynamically retrieves a token from GitHub to add our runner. One crucial parameter we need is a GitHub Personal Access Token (PAT). We need this to authenticate to the GitHub Actions API to generate the actions token.
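To illustrate the token step, here is a minimal sketch of how a registration token can be requested from the GitHub REST API with a PAT. It is not lifted from the deployment script; the function names are hypothetical, it assumes `curl` is installed, and it uses a simple `sed` expression instead of a proper JSON parser.

```shell
# Build the REST endpoint that issues a short-lived runner registration token.
registration_token_url() {
  local owner="$1" repo="$2"
  printf 'https://api.github.com/repos/%s/%s/actions/runners/registration-token\n' "$owner" "$repo"
}

# Pull the "token" field out of the JSON response read from stdin.
extract_token() {
  sed -n 's/.*"token"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Request a token using the PAT (needs network access and curl).
# Arguments: pat owner repo
get_runner_token() {
  local pat="$1" owner="$2" repo="$3"
  curl -fsS -X POST \
    -H "Authorization: token ${pat}" \
    -H "Accept: application/vnd.github+json" \
    "$(registration_token_url "$owner" "$repo")" | extract_token
}
```

The value returned by `get_runner_token` is what the runner's `config.sh` expects for its `--token` argument, alongside `--url https://github.com/<owner>/<repo>`. Because the token expires quickly, it makes sense to request it immediately before configuring the agent.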

To create the PAT, highlight your user account from the top right of the GitHub portal:

Click Settings

Click Developer Settings

Select Personal access tokens and then Generate new token

Set the scope to repo

Scroll to the bottom and then click Generate token

Make sure to copy the PAT, as you can’t retrieve it afterwards (you could always regenerate it if needed :) )

Now we have the PAT, we can go ahead and deploy the VM using the ARM template I created.

Go ahead and get it from

https://github.com/dmc-tech/AzsHubTools/blob/main/ghRunner/template.json

There’s nothing fancy; it deploys a VNET, NIC, Public IP, VM (it uses Ubuntu 18.04 - make sure you have it available via the Azure Stack Hub Marketplace!) and then deploys a Custom script to install a bunch of tools and the runner agent. The only parameters you will need to provide are:

| Parameter | Description |
|---|---|
| gitHubPat | Personal Access Token used to access GitHub |
| gitHubRepo | GitHub Repo to create the Self Hosted Runner |
| gitHubOwner | GitHub Owner or Organisation where the repo is located for the Runner |
| adminPublicKey | Public SSH key used to login to the VM |

If you’re not sure how the Owner and Repo are derived, it’s simple:
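For a repository URL of the form https://github.com/<owner>/<repo>, the owner is the first path segment and the repo is the second. As a quick sketch (the function name is hypothetical), the two values can be split out in the shell like this:

```shell
# Split a GitHub repository URL into its owner and repo parts.
parse_github_repo() {
  local url="${1%/}"                        # drop a trailing slash, if any
  url="${url%.git}"                         # drop a trailing .git, if any
  local path="${url#https://github.com/}"   # owner/repo[/...]
  local owner="${path%%/*}"                 # first path segment
  local rest="${path#*/}"
  local repo="${rest%%/*}"                  # second path segment
  printf '%s %s\n' "$owner" "$repo"
}

parse_github_repo "https://github.com/dmc-tech/AzsHubTools"
# prints: dmc-tech AzsHubTools
```

So for the repository that hosts the template, gitHubOwner would be dmc-tech and gitHubRepo would be AzsHubTools.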

To generate the adminPublicKey on Windows systems, I prefer to use MobaXterm. See the end of this post for how to generate the private/public key pair.

Deploy using the ARM template within your tenant subscription.
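If you would rather not type the values in by hand, the four parameters can be supplied through a standard ARM parameters file. The sketch below writes one; the function name and default file name are assumptions of mine, and only the parameter names come from the table above.

```shell
# Write an ARM deployment parameters file for the four template parameters.
# Arguments: gitHubPat gitHubRepo gitHubOwner adminPublicKey [output-file]
write_runner_params() {
  local pat="$1" repo="$2" owner="$3" pubkey="$4" out="${5:-runner.parameters.json}"
  cat > "$out" <<EOF
{
  "\$schema": "https://schema.management.azure.com/schemas/2015-01-01/deploymentParameters.json#",
  "contentVersion": "1.0.0.0",
  "parameters": {
    "gitHubPat": { "value": "${pat}" },
    "gitHubRepo": { "value": "${repo}" },
    "gitHubOwner": { "value": "${owner}" },
    "adminPublicKey": { "value": "${pubkey}" }
  }
}
EOF
  # The file contains the PAT in clear text, so restrict access to it.
  chmod 600 "$out"
}
```

The resulting file can be uploaded in the portal's custom deployment blade or passed to a command-line deployment (for the Azure CLI, via `--parameters @runner.parameters.json`). Delete it once the deployment has finished, as it holds your PAT.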

The deployment takes about 10 minutes to complete in my environment.

We can see that the agent has successfully deployed by checking the Actions / Runners settings within our GitHub repo:

Success!!!

Now we can go ahead and test a workflow.

Within your repo, if it doesn’t already exist, create the following directory structure:

/.github/workflows

This is where the workflow yaml files are stored that our actions will use.

For a simple test, go ahead and copy the following into this folder in your repo (and commit it to the main branch!):

https://github.com/dmc-tech/AzsHubTools/blob/main/.github/workflows/testAzureStackHub.yml

The workflow is manually triggered (workflow_dispatch) and prompts for a parameter (the name of the subscription you want to run the action against).

on:
  workflow_dispatch:
    inputs:
      subscription:
        description: 'Azure Stack Hub User subscription'
        required: true
        default: 'TenantSubscription'

name: Test GitHub Runner in an Azure Stack Hub environment

env:
  ACTIONS_ALLOW_UNSECURE_COMMANDS: 'true'

jobs:
  azurestackhub-test:
    runs-on: self-hosted
    steps:
      - uses: actions/checkout@main
      - name: Login to AzureStackHub with CLI
        uses: azure/login@releases/v1
        with:
          creds: ${{ secrets.AZURESTACKHUB_CREDENTIALS }}
          environment: 'AzureStack'
          enable-AzPSSession: false
      - name: Run Azure CLI Script Against AzureStackHub
        run: |
          hostname
          subId=$(az account show --subscription ${{ github.event.inputs.subscription }} --query id -o tsv)
          az account set --subscription ${{ github.event.inputs.subscription }}
          az group list --output table

You can see that the workflow refers to the secret we defined earlier: secrets.AZURESTACKHUB_CREDENTIALS

The workflow configures the Azure Stack Hub tenant environment on the runner VM (using the values from the JSON stored in the secret), connects using the service principal and secret and then runs the Azure CLI commands to list the resource groups in the specified subscription.

To run the workflow, head over to the GitHub site:

Click on Actions and then the name of the workflow we added: ‘Test GitHub Runner in an Azure Stack Hub environment’

Click on Run workflow and type in the name of your Azure Stack Hub tenant subscription (this caters for multiple subscriptions)

Hopefully you will see that the action ran successfully as denoted above. Click on the entry to check out the results.

Click on the job and you can see the output. You can see that the Azure CLI command to list the resource groups for the subscription completed and returned the results.

With that, we’ve shown how we can automate the deployment of a self-hosted runner on Azure Stack Hub and demonstrated how to run a workflow.

I really like GitHub Actions and there’s scope for some powerful automation, so although what I’ve shown is very simple, I hope you find it useful and that it helps you get started.

Appendix: Creating SSH keys in MobaXterm

1. Click on Tools

2. Select MobaKeyGen (SSH key generator)

3. Click on Generate

4. Copy the Public key for use with the ARM template.

5. Save the Private Key (I recommend setting a Key passphrase). You’ll need this if you need to SSH to the VM!

Managing AKS HCI Clusters from your workstation

In this article, I’m going to show you how you can manage your minty fresh AKS HCI clusters that have been deployed by PowerShell, from your Windows workstation. It will detail what you need to do to obtain the various config files required to manage the clusters, as well as the tools (kubectl and helm).

I want to run this from a system that isn’t one of the HCI cluster nodes, as I wanted to test a ‘real life’ scenario. I wouldn’t want to be installing tools like helm on production HCI servers, although it’s fine for kicking the tires.

Mainly I’m going to show how I’ve automated the installation of the tools, the onboarding process for the cluster to Azure Arc, and also deploying Container Insights, so the AKS HCI clusters can be monitored.

TL;DR - jump here to get the script and what configuration steps you need to do to run it

Here are the high-level steps:

Install the Az PoSh modules

Connect to a HCI cluster node that has the AksHCI PoSh module deployed (where you ran the AKS HCI deployment from)

Copy the kubectl binary from the HCI node to your Win 10 system

Install Chocolatey (if not already installed)

Install Helm via Choco

Get the latest Container Insights deployment script

Get the config files for all the AKS HCI clusters deployed to the HCI cluster

Onboard the cluster to Arc if not already completed

Deploy the Container Insights solution to each of the clusters

Assumptions

Your workstation has connectivity to the Internet.

Steps 1 - 5 of the Arc for Kubernetes onboarding have taken place and the service principal has required access to carry out the deployment. Detailed instructions are here

You have already deployed one or more AKS HCI clusters.

Install the Az PoSh Modules

We use the Az module to run some checks that the cluster has been onboarded to Arc. The enable-monitoring.ps1 script requires these modules too.

Connect to a HCI Node that has the AksHci PowerShell module deployed

I’m making the assumption that you will have already deployed your AKS HCI cluster via PowerShell, so one of the HCI cluster nodes already has the latest version of the AksHci PoSh module installed. Follow the instructions here if you need guidance.

In the script I wrote, the remote session is stored as a variable and used throughout

Copy the kubectl binary from the HCI node to your Win 10 system

I make it easy on myself by copying the kubectl binary that’s installed as part of the AKS HCI deployment on the HCI cluster node. I use the stored session details to do this. I place it in a directory called c:\wssd on my workstation as it matches the AKS HCI deployment location.

Install Chocolatey

The recommended way to install Helm on Windows is via Chocolatey, per https://helm.sh/docs/intro/install/, hence the need to install Choco. You can manually install it via https://chocolatey.org/install.ps1, but my script does it for you.

Install Helm via Choco

Once Choco is installed, we can go and grab helm by running:

choco install kubernetes-helm -y

Get the latest Container Insights deployment script

Microsoft have provided a PowerShell script to enable monitoring of Arc managed K8s clusters here.

Full documentation on the steps is here.

Get the config files for all the AKS HCI clusters deployed to the HCI cluster

This is where we use the AksHci module to obtain the config files for the clusters we have deployed. First, we get a list of all the deployed AKS HCI clusters with this command:

Get-AksHciCluster

Then we iterate through those objects and get the config file so we can connect to the Kubernetes cluster using kubectl. Here’s the command:

Get-AksHciCredential -clustername $AksHciClustername

Onboard the cluster to Arc if not already completed

First, we check to see if the cluster is already onboarded to Arc. We construct the resource Id and then use the Get-AzResource command to check. If the resource doesn’t exist, then we use the Install-AksHciArcOnboarding cmdlet to get the cluster onboarded to our desired subscription, region and resource group.

$aksHciCluster = $aksCluster.Name
$azureArcClusterResourceId = "/subscriptions/$subscriptionId/resourceGroups/$resourceGroup/providers/Microsoft.Kubernetes/connectedClusters/$aksHciCluster"

# Onboard the cluster to Arc
$AzureArcClusterResource = Get-AzResource -ResourceId $azureArcClusterResourceId
if ($null -eq $AzureArcClusterResource) {
    Invoke-Command -Session $session -ScriptBlock { Install-AksHciArcOnboarding -clustername $using:aksHciCluster -location $using:location -tenantId $using:tenant -subscriptionId $using:subscriptionId -resourceGroup $using:resourceGroup -clientId $using:appId -clientSecret $using:password }
    # Wait until the onboarding has completed...
    . $kubectl logs job/azure-arc-onboarding -n azure-arc-onboarding --follow
}

Deploy the Container Insights solution to each of the clusters

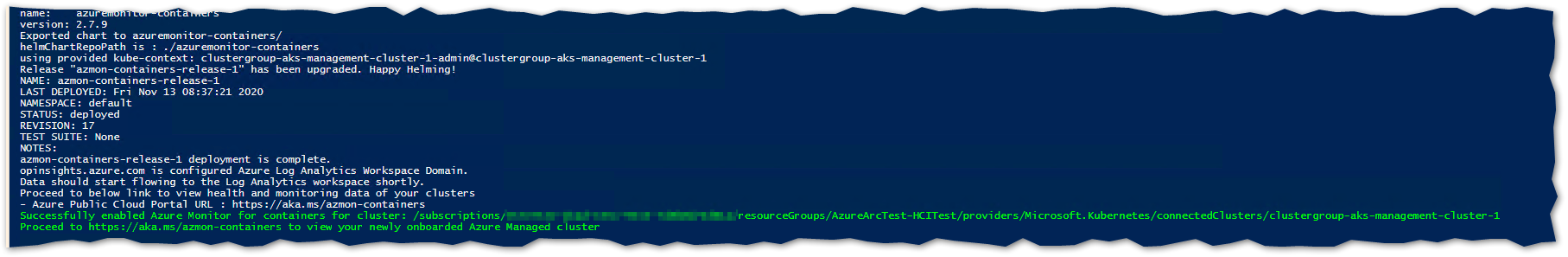

Finally, we use the enable-monitoring.ps1 script with the necessary parameters to deploy the Container Insights solution to the Kubernetes cluster.

NOTE

At the time of developing the script, I found that I had to edit the version of enable-monitoring.ps1 that was downloaded, as the helm chart version defined (2.7.8) was not available. I changed this to 2.7.7 and it worked.

The current version of the script on GitHub is now set to 2.7.9, which works.

If you do find there are issues, it is worth trying a previous version, as I did.

You want to look for where the variable $mcrChartVersion is set (line 63 in the version I downloaded) and change to:

$mcrChartVersion = "2.7.7"

Putting It Together: The Script

With the high level steps described, go grab the script.

You’ll need to modify it once downloaded to match your environment. The variables you need to modify are listed below and are at the beginning of the script. (I didn’t get around to parameterizing it; go stand in the corner, Danny! :) )

$hcinode = '<hci-server-name>'

$resourceGroup = "<Your Arc Resource Group>"

$location = "<Region of resource>"

$subscriptionId = "<Azure Subscription ID>"

$appId = "<App ID of Service Principal>"

$password = "<App ID Secret>"

$tenant = "<Tenant ID for Service Principal>"

Hopefully it’s clear enough that you’ll need to have created a Service Principal in your Azure subscription, providing the App ID, Secret and Tenant ID. You also need to provide the ID of the Azure subscription you are connecting Arc to, as well as the Resource Group name. If you’re manually creating a Service Principal, make sure it has rights to the Resource Group (e.g. Contributor).

Reminder

Follow Steps 1 - 5 in the following doc to ensure the pre-reqs for Arc onboarding are in place. https://docs.microsoft.com/en-us/azure-stack/aks-hci/connect-to-arc

When the script is run, it will retrieve all the AKS HCI clusters you have deployed and check they are onboarded to Arc. If not, it will go ahead and do that. Then it will retrieve the kubeconfig file, store it locally and add the path to the file to the KUBECONFIG environment variable. Lastly, it will deploy the Container Insights monitoring solution.

Here is an example of the Arc onboarding logs

and here is confirmation of the successful deployment of the Container Insights solution to the cluster.

What you will see in the Azure Portal for Arc managed K8s clusters:

Before onboarding my AKS HCI clusters…

..and after

Here’s an example of what you will see in the Azure Portal when the Container Insights solution is deployed to the cluster, lots of great insights and information are surfaced:

On my local system, I can now administer my clusters using kubectl and Helm. Here’s an example that shows I have multiple clusters in my config, each with its own context:

The config is derived from the KUBECONFIG environment variable. Note how the config files I retrieved are explicitly stated:
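The script handles this in PowerShell on Windows, where the entries in KUBECONFIG are separated by semicolons. As a rough shell analogue for WSL or Linux (where the separator is a colon), the sketch below appends a kubeconfig path only if it is not already listed. The function name is hypothetical.

```shell
# Append a kubeconfig file to KUBECONFIG unless it is already listed.
# Arguments: file [separator]  (separator defaults to ':'; Windows uses ';')
add_kubeconfig() {
  local file="$1" sep="${2:-:}"
  case "${sep}${KUBECONFIG:-}${sep}" in
    *"${sep}${file}${sep}"*)
      ;;  # already present, nothing to do
    *)
      export KUBECONFIG="${KUBECONFIG:+${KUBECONFIG}${sep}}${file}"
      ;;
  esac
}
```

After adding each retrieved file, `kubectl config get-contexts` lists the contexts from all of them, and `kubectl config use-context <name>` switches between clusters.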

I’m sure that as AKS HCI matures, more elegant solutions to enable remote management and monitoring will be available, but in the meantime, I’m pretty pleased that I achieved what I set out to do.

Rotating Event Hubs RP External Certificate on Azure Stack Hub

I have been testing the Event Hubs public preview release for Azure Stack Hub, looking at the install process and what kind of actions an Operator would need to do to keep things running. One of the most important for me is rotating secrets and certificates. If your certificates expire, you won’t be able to access the RP, hence the importance.

If you check the current documentation for rotating secrets, it gives the generic instructions for rotating external certificates for the Azure Stack Hub stamp. I expect that this will be corrected in the near future, but until then, how do you go about it for the Public Preview?

Firstly, you need the latest version of the Azure Stack Hub PowerShell modules:

Then you need to connect to your Azure Stack Hub Admin environment. Use the steps detailed in the following article: https://docs.microsoft.com/en-us/azure-stack/operator/azure-stack-powershell-configure-admin?view=azs-2002

(Remember, if using the Az module, to rename the commands per https://docs.microsoft.com/en-us/azure-stack/operator/powershell-install-az-module?view=azs-2002#7-use-the-az-module)

Copy the Event Hubs pfx file to a local directory and run the following script (the example uses the Az module):

$ProductId = 'microsoft.eventhub'

$productVersion = (Get-AzsProductDeployment -ProductId microsoft.eventhub).properties.deployment.version

$PackageId = ('{0}.{1}' -f $ProductId, $productVersion)

$packageSecret = ((Get-AzsProductSecret -PackageId $PackageId).value.name).split('/')[2]

$certPath = 'C:\AzsCerts\EventHubs\cert.pfx'

$pfxPassword = (ConvertTo-SecureString '<pfxPassword>' -AsPlainText -Force)

Set-AzsProductSecret -PackageId $PackageId -SecretName $packageSecret -PfxFileName $certPath -PfxPassword $pfxPassword -Force -Verbose

Invoke-AzsProductRotateSecretsAction -ProductId $ProductId

Modify the $certPath variable and <pfxPassword> to match what you have set and then run the script.

The process will take quite a long time to complete. Whilst the operation is taking place, you will receive the status of the command.

If you choose to stop the cmdlet/script, the process will continue in the background. You can check the status at any time by running the following:

(Get-AzsProductDeployment -ProductId microsoft.eventhub).properties

You should see something like this when the process is still running:

… and when successfully finished:

Hope that helps until the official documentation is released!

Article updated 16 July 2020 with an updated method to obtain the secret name, provided by @kongou_ae - Thanks!

Generate Azure Stack Hub Certificates using an Enterprise CA - the automated way

Automate your Azure Stack Hub Certificates signed by a Windows Enterprise CA with these scripts.

Anyone who has had to deploy or operate Azure Stack Hub will tell you that one of the most laborious and tricky tasks is the generation of PKI certificates for the external endpoints. There are quite stringent requirements that can be found here.

I have already written about and created a script that can be used to generate Let’s Encrypt signed certificates, but that doesn’t meet everyone’s requirements.

A common scenario is to use a Certificate Authority hosted on Windows Server. Here are the high level tasks that need to be carried out:

Generate the requests

Submit the request to the CA

Approve the request

Retrieve the signed cert

Import the signed cert

Export the certificate as a Pfx file with password

Place the exported Pfx files into specific folders for use by Azure Stack Hub

Test the certs for validity

Microsoft have helped by creating the Azure Stack Readiness Checker PowerShell module, which really is a great tool for generating the certificate requests and checking the validity of the Pfx files, but we need a process to automate all of the steps above.

To help with that, I’ve created a script that will take some inputs and do the rest for you. You can find it here.

The main script is New-AzsHubCertificates.ps1. It takes the following parameters:

| Parameter | Type | Description |
|---|---|---|
| azsregion | String | Azure Stack Hub Region Name |
| azsCertDir | String | Directory to store certificates |
| CaServer | String | IP address of the Certificate Authority Server |
| IdentitySystem | String | Either AAD or ADFS |
| CaCredential | Credential | Credentials to connect to the Certificate Authority Server |
| AppServer | Boolean | Choose if App Service Certs should be generated |
| DBAdapter | Boolean | Choose if DB Adapter Cert should be generated |
| EventHubs | Boolean | Choose if Event Hubs Cert should be generated |
| IoTHubs | Boolean | Choose if IoT Hub Cert should be generated |
| SkipDeployment | Boolean | Choose if you do not require the deployment certificates generating |
| pfxPassword | SecureString | Password for the Pfx Files |

It utilizes the Azure Stack Readiness Checker module to generate the cert requests and to also validate that the certificates are fit for purpose, so ensure that you have the module installed. The script also needs to be run from an elevated session as it needs to import and export certificates from the Computer store that you run it from (needless to say you need to run it from a Windows client :) ).

The first thing the script does is create the folder for the certificates. It takes the input you specify and will create a sub-folder from there for the Azure Stack Hub Region, so you could run this script on the same system for multiple stamps.

Next, it uses the New-AzsCertificateSigningRequest function from the Readiness checker module to create the requests. It places them in the \requests directory.

After this, WinRM config is checked to see if the IP address of the CA server exists in Trusted Hosts. If not, it is added. All other entries are maintained.

A Session is established to the CA server, and the request files are copied to it.

On the CA server, Certutil.exe is used to retrieve the Public CA certificate, which is stored in a file.

On the CA server, CertReq.exe is used to submit each of the requests to the CA.

On the CA server, each request is approved using CertUtil -resubmit.

On the CA server, each Signed cert is retrieved and stored as a p7b and crt file.

Each signed cert is copied from the CA server to the local system where you’re running the script.

The Public CA certificate is copied from the CA server to the local system.

On the CA server, the working folder is deleted.

The remote PS Session is removed.

The Public CA certificate is imported into the computer’s root store.

The directory structure required for validating certificates is created.

The signed certificates are imported.

The private certificates are exported and saved as a pfx file with password to the corresponding directory.

The certificates are validated using the Invoke-AzsCertificateValidation function from the Readiness checker module.

The folder structure for the certificates is as follows:

C:\AZSCERTS\AZS1
+---AAD
|   +---ACSBlob
|   |       blob.pfx
|   |
|   +---ACSQueue
|   |       queue.pfx
|   |
|   +---ACSTable
|   |       table.pfx
|   |
|   +---Admin Extension Host
|   |       adminhosting.pfx
|   |
|   +---Admin Portal
|   |       adminportal.pfx
|   |
|   +---ARM Admin
|   |       adminmanagement.pfx
|   |
|   +---ARM Public
|   |       management.pfx
|   |
|   +---KeyVault
|   |       vault.pfx
|   |
|   +---KeyVaultInternal
|   |       adminvault.pfx
|   |
|   +---Public Extension Host
|   |       hosting.pfx
|   |
|   \---Public Portal
|           portal.pfx
|
+---AppServices
|   +---API
|   |       api.pfx
|   |
|   +---DefaultDomain
|   |       wappsvc.pfx
|   |
|   +---Identity
|   |       sso.pfx
|   |
|   \---Publishing
|           ftp.pfx
|
+---DBAdapter
|       DBAdapter.pfx
|
+---EventHubs
|       eventhub.pfx
|
\---IoTHub
        mgmtiothub.pfx
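The script creates this structure for you on Windows. Purely to illustrate the layout, here is a shell sketch that builds the same empty folder skeleton under a base directory (for example from WSL). The function name is hypothetical, and the idea that the top-level folder is named after the identity system (AAD by default) is my assumption from the tree above.

```shell
# Create the empty folder skeleton shown above under a base directory.
# Arguments: base-dir [identity-system]  (identity system defaults to AAD)
make_cert_dirs() {
  local base="$1" identity="${2:-AAD}" d
  # Deployment certificate folders, one per external endpoint.
  while IFS= read -r d; do
    mkdir -p "${base}/${identity}/${d}"
  done <<EOF
ACSBlob
ACSQueue
ACSTable
Admin Extension Host
Admin Portal
ARM Admin
ARM Public
KeyVault
KeyVaultInternal
Public Extension Host
Public Portal
EOF
  # App Service certificate folders.
  for d in API DefaultDomain Identity Publishing; do
    mkdir -p "${base}/AppServices/${d}"
  done
  # Single-certificate resource providers.
  mkdir -p "${base}/DBAdapter" "${base}/EventHubs" "${base}/IoTHub"
}
```

Running `make_cert_dirs ./AZSCERTS/AZS1` produces the folders only; the pfx files themselves still come from the export step described earlier.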

I have written an example script new-AzsHubcertificatesexample.ps1 so that you can generate the correct parameter types, or create a new, unique Pfx password for use by the main script. Change the variables according to your environment. You could take this further by storing the pfx password in a KeyVault; maybe I’ll write a further post on how to do this … :)

Azure Stack Hub: When you can't view/add permissions in a tenant subscription from the portal

I have noticed on a few occasions that, for a tenant subscription hosted in an Azure Stack region, I am either unable to view the IAM permissions or unable to add a user/service principal/group for the subscription. I have needed to do this to assign a service principal as a contributor to a subscription so that I can deploy a Kubernetes cluster via AKSE.

Typically for viewing the permissions, clicking on the Refresh button does the trick. More problematic is adding permissions via the portal. Doing so renders a screen like below:

As highlighted, the animated ‘dots’ show the blade constantly trying to retrieve the data but can’t. It is actually a known issue and is highlighted in the release notes.

The remediation it offers is to use PowerShell to verify the permissions, and gives a link to the Get-AzureRmRoleAssignment CmdLet. Not helpful if you want to set permissions, so here’s a step-by-step description of what you’ll need to do.

Pre-Reqs:

Your user account is the owner of the subscriptions when they were set up.

You have the correct PowerShell for Azure Stack Hub installed

For the example shown, I am using Azure AD identities, I have more than one tenant subscription assigned to my user account and I am adding a service principal. At the end of the post, I will show the commands for adding a group or a user.

Step-By-Step

From PowerShell, connect to the tenant subscription you want to change the permissions on. The documentation is for connecting as an operator, so here’s how to do it as a user:

$Region = '<yourRegion>'

$FQDN = '<yourFQDN>'

$AADTenantName = '<yourAADTenantName>'

$envName = "AzureStack$region"

# Register an Azure Resource Manager environment that targets your Azure Stack instance. Get your Azure Resource Manager endpoint value from your service provider.

Add-AzureRMEnvironment -Name $envName -ArmEndpoint "https://management.$Region.$FQDN" `
    -AzureKeyVaultDnsSuffix vault.$Region.$FQDN `
    -AzureKeyVaultServiceEndpointResourceId https://vault.$Region.$FQDN

# Set your tenant name.

$AuthEndpoint = (Get-AzureRmEnvironment -Name $envName).ActiveDirectoryAuthority.TrimEnd('/')

$TenantId = (invoke-restmethod "$($AuthEndpoint)/$($AADTenantName)/.well-known/openid-configuration").issuer.TrimEnd('/').Split('/')[-1]

# After signing in to your environment, Azure Stack cmdlets

# can be easily targeted at your Azure Stack instance.

Add-AzureRmAccount -EnvironmentName $envName -TenantId $TenantId

As I have more than one subscription, let’s get a list so we can select the correct one:

Get-AzureRmSubscription | ft Name

I got the following output:

I want to modify the AKSTest subscription:

#Set the context

$ctx = set-azurermcontext -Subscription AKSTest

$ctx

Running that command, I get:

Cool; I’ve got the correct subscription now, so let’s list the permissions assigned to it:

Get-AzureRmRoleAssignment

OK, so those are the standard permissions assigned when the subscription is created. Let’s add a service principal.

First, let’s get the service principal object:

Get-AzureRmADServicePrincipal | ? {$_.DisplayName -like '*something*'} | ft DisplayName

I want to assign AKSEngineDemo:

$spn = Get-AzureRmADServicePrincipal -SearchString AKSEngineDemo

Let’s assign the service principal to the tenant subscription:

New-AzureRmRoleAssignment -ObjectId $spn.Id -RoleDefinitionName Contributor -scope "/subscriptions/$($ctx.Subscription.Id)"

When I take a look in the portal now (after doing a refresh :) ), I see the following:

Here are the commands for adding an Azure AD User:

#Find the User object you want to add

Get-AzureRmADUser | ? {$_.DisplayName -like '*something*'} |ft DisplayName

#Assign the object using the displayname of the user

$ADUser = Get-AzureRmADUser -SearchString <UserName>

New-AzureRmRoleAssignment -ObjectId $ADUser.Id -RoleDefinitionName Contributor -scope "/subscriptions/$($ctx.Subscription.Id)"

Finally, how to add an Azure AD Group:

#Find the Group object you want to add

Get-AzureRmADGroup | ? {$_.DisplayName -like '*something*'} | ft DisplayName

#Assign the object using the display name of the group

$ADGroup = Get-AzureRmADGroup -SearchString <GroupName>

New-AzureRmRoleAssignment -ObjectId $ADGroup.Id -RoleDefinitionName Contributor -scope "/subscriptions/$($ctx.Subscription.Id)"
