Interesting changes to Arc Agent 1.34 with expanded detected properties

Microsoft just pushed out Azure Arc Connected Agent 1.34, and with it comes some enrichment of the detected properties for hybrid servers.

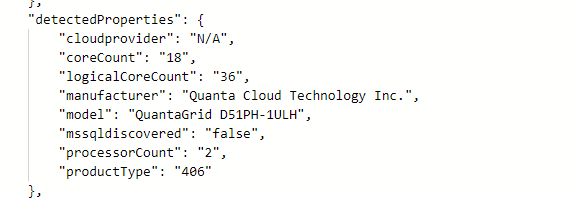

This is what the properties looked like prior to the update.

Agent 1.33 and earlier

Okay… so what’s new and different?

New detected properties for Azure Arc Connected Agent 1.34

serialNumber, processorNames and total physical memory (totalPhysicalMemoryInBytes and totalPhysicalMemoryInGigabytes)

resources

| where ['type'] == "microsoft.hybridcompute/machines"

| extend processorCount = properties.detectedProperties.processorCount,

serialNumber = properties.detectedProperties.serialNumber,

manufacturer= properties.detectedProperties.manufacturer,

processorNames= properties.detectedProperties.processorNames,

logicalCoreCount = properties.detectedProperties.logicalCoreCount,

smbiosAssetTag = properties.detectedProperties.smbiosAssetTag,

totalPhysicalMemoryInBytes = properties.detectedProperties.totalPhysicalMemoryInBytes,

totalPhysicalMemoryInGigabytes = properties.detectedProperties.totalPhysicalMemoryInGigabytes

| project name,serialNumber,logicalCoreCount,manufacturer,processorCount,processorNames,totalPhysicalMemoryInBytes,totalPhysicalMemoryInGigabytes

This lets organizations collect processor, serial number and memory information in a simple fashion via the Azure Arc infrastructure. It can be used for things like consolidation and migration planning, decommissioning aging hardware, or even warranty lookups if you don’t have a current hardware CMDB.
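As a rough illustration of the consolidation-planning idea, here is a minimal Python sketch that rolls up rows exported from the query above by manufacturer. The sample rows, server names and vendor name are made up, and the sketch assumes the export is a list of dictionaries keyed by the projected column names; values may arrive as numbers or strings, so both are handled.

```python
from collections import defaultdict

# Hypothetical rows, shaped like the columns projected by the query above.
# Names, manufacturers and sizes are invented for illustration only.
SAMPLE_ROWS = [
    {"name": "srv-01", "manufacturer": "VendorA", "logicalCoreCount": 16,
     "totalPhysicalMemoryInBytes": 68719476736},
    {"name": "srv-02", "manufacturer": "VendorA", "logicalCoreCount": "8",
     "totalPhysicalMemoryInBytes": "34359738368"},
    {"name": "srv-03", "manufacturer": None, "logicalCoreCount": 4,
     "totalPhysicalMemoryInBytes": 17179869184},
]


def summarize_by_manufacturer(rows):
    """Count servers, logical cores and memory (GiB) per manufacturer."""
    summary = defaultdict(lambda: {"servers": 0, "cores": 0, "memory_gib": 0.0})
    for row in rows:
        # Rows with no detected manufacturer are grouped under "unknown".
        entry = summary[row.get("manufacturer") or "unknown"]
        entry["servers"] += 1
        entry["cores"] += int(row.get("logicalCoreCount") or 0)
        entry["memory_gib"] += int(row.get("totalPhysicalMemoryInBytes") or 0) / 2**30
    return dict(summary)


SUMMARY = summarize_by_manufacturer(SAMPLE_ROWS)
for vendor, totals in SUMMARY.items():
    print(vendor, totals)
```

The same roll-up could of course be done directly in KQL with summarize; doing it client-side is only handy if you are already exporting the results for a report.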

Arc SQL Extension - Best Practices Assessment

A look into the Azure Arc SQL Extension, how the best practices assessment works, and what it can extract from an Azure Arc-enabled SQL Server.

Azure Arc helps increase the visibility of your IT estate outside of Azure. Layering the SQL Extension on top, we can bring a centralized view of your SQL Servers and databases, and now other control features help with management activities.

Let’s take a look at the Best practices assessment (BPA)

The first thing to know is: “Best practices assessment is only available for SQL Server with Software Assurance, SQL subscription, or with Azure pay-as-you-go billing. Update the license type appropriately.”

If you want to look at your overall licensing, you can run a query against the Azure Resource Graph. While we are at it, let’s add it to a dashboard.

resources

| extend SQLversion = properties.version

| extend SQLEdition = properties.edition

| extend licensetype = properties.licenseType

| where type == ("microsoft.azurearcdata/sqlserverinstances")

| project id, name,SQLversion,SQLEdition, licensetype

Note: as of writing this, the property is ‘lincetype’ not ‘licensetype’; this will likely be corrected.

There are some minor edits to the query in this image: I added the id column and selected ‘formatted results’ so the record is linked.

We can now see the query on the dashboard and see the license types for the servers. We have a ‘Paid’ for sv5-su5-6320018 so we can configure BPA for both instances.
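If you export the query results, a small Python sketch like the one below can split instances into those that look ready for BPA and those whose license type needs a second look. The instance names are hypothetical, and the set of eligible values is an assumption: ‘Paid’ appears in the results above, while treating ‘PAYG’ as eligible and ‘LicenseOnly’ as not eligible is my reading of the licensing note, so check it against your own data.

```python
# Assumption: license type values (lower-cased) that allow BPA to be enabled.
# "Paid" is seen in the dashboard above; "payg" is assumed for pay-as-you-go.
ELIGIBLE_LICENSE_TYPES = {"paid", "payg"}

# Hypothetical export of the Resource Graph query, one dict per SQL instance.
SAMPLE_INSTANCES = [
    {"name": "sql-01", "licensetype": "Paid"},
    {"name": "sql-02", "licensetype": "LicenseOnly"},
    {"name": "sql-03", "licensetype": "PAYG"},
    {"name": "sql-04", "licensetype": None},
]


def split_by_bpa_eligibility(instances):
    """Return (eligible, needs_review) lists of instance names."""
    eligible, needs_review = [], []
    for instance in instances:
        # Missing or null license types are treated as "needs review".
        license_type = str(instance.get("licensetype") or "").lower()
        target = eligible if license_type in ELIGIBLE_LICENSE_TYPES else needs_review
        target.append(instance["name"])
    return eligible, needs_review


ELIGIBLE, NEEDS_REVIEW = split_by_bpa_eligibility(SAMPLE_INSTANCES)
print("Eligible for BPA:", ELIGIBLE)
print("Check license type:", NEEDS_REVIEW)
```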

You can change the SQL Server Registration to automatically handle the licensing selection

To enable an assessment pick a Log Analytics Workspace, click “Enable assessment”

The prerequisites are listed here. One to make sure you have completed is:

The SQL Server built-in login NT AUTHORITY\SYSTEM must be a member of the SQL Server sysadmin server role for all the SQL Server instances running on the machine.

We can see what resource the deployment creates.

Checking the data collection rule, we can see that the data source is local files; it checks for CSVs here

C:\Windows\System32\config\systemprofile\AppData\Local\Microsoft SQL Server Extension Agent\Assessment\*.csv

and uploads them into the SqlAssessment_CL table in the Log Analytics workspace. We can see the table in the workspace.

Depending on your purpose you may want to consider changing the table settings.

Depending on the size of the servers and the number of databases it might take a while to run. The default schedule is weekly but adjustable.

Once it completes, you will be able to explore the results.

You can look at the query that reads from the SqlAssessment_CL table. The data is dumped into a single RawData field that needs to be parsed. This query will help you get a jump start on accessing the data.
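To give a feel for what parsing a single RawData field involves outside of Log Analytics, here is a minimal Python sketch. It assumes each RawData value is a JSON document; the field names (TargetName, Severity, Message) and the sample records are hypothetical and will not match the real assessment schema exactly.

```python
import json
from collections import Counter

# Hypothetical rows exported from the SqlAssessment_CL table. The RawData
# payloads and their field names are invented for illustration.
SAMPLE_ROWS = [
    {"RawData": '{"TargetName": "db1", "Severity": "High", "Message": "example finding"}'},
    {"RawData": '{"TargetName": "db2", "Severity": "Low", "Message": "example finding"}'},
    {"RawData": '{"TargetName": "db1", "Severity": "High", "Message": "another finding"}'},
    {"RawData": "not valid json"},
]


def parse_raw_data(rows):
    """Parse the RawData column of each row, skipping rows that are not JSON objects."""
    parsed = []
    for row in rows:
        try:
            record = json.loads(row["RawData"])
        except (KeyError, TypeError, json.JSONDecodeError):
            continue
        if isinstance(record, dict):
            parsed.append(record)
    return parsed


def count_by_severity(records):
    """Count findings per severity; 'Severity' is an assumed field name."""
    return Counter(record.get("Severity", "unknown") for record in records)


RECORDS = parse_raw_data(SAMPLE_ROWS)
SEVERITY_COUNTS = count_by_severity(RECORDS)
print(dict(SEVERITY_COUNTS))
```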

There is a lot to explore with the Azure Arc SQL Extension and various ways to use the platform to provide more visibility and control over your SQL servers.

Installing AKS Edge Essentials public preview

UPDATE: Check out this later post for lessons learnt and some configuration information that you will want to use https://www.cryingcloud.com/blog/2023/2/3/aks-edge-essentials-diving-deeper

Microsoft announced the public preview of AKS Edge Essentials (I’m going to abbreviate to AKS-EE!) a few months ago and I wanted to try it out. I think it's a great idea to be able to run and manage containerized workloads hosted on smaller, but capable compute systems such as Intel NUCs, a Windows desktop/laptop etc. Using Azure Arc to manage this ecosystem is quite a compelling prospect.

https://learn.microsoft.com/en-gb/azure/aks/hybrid/aks-edge-overview

To try it out, I looked through the public preview documentation, using my Windows 11 laptop to run AKS-EE. The docs are pretty good, but make some assumptions, or miss some steps, especially if you're coming to this cold. I decided to use K8s, rather than K3s, so this blog is geared towards that.

There is a recently published Azure Arc jumpstart scenario which creates a VM in Azure and does the same (but automated!).

Prep your machine

https://learn.microsoft.com/en-gb/azure/aks/hybrid/aks-edge-howto-setup-machine

First of all, you need a system running Windows 10/11 or Windows Server 2019/2022. I chose my laptop as it is capable, but you could use an Azure VM running Windows Server 2019/2022.

I'm using VS Code, so I'd recommend installing that if you haven't already done so. You will also need your own GitHub account, as you will need to clone the AKS Edge project into your own repo.

If Hyper-V isn't already enabled, you can either do this manually, or let the AKS Edge setup script do this for you. Check it's enabled (from an elevated PowerShell prompt):

Get-WindowsOptionalFeature -Online -FeatureName *hyper*

If you need to install it (from an elevated PowerShell prompt):

Enable-WindowsOptionalFeature -Online -FeatureName Microsoft-Hyper-V -All

The docs say to disable power standby settings. If you’re deploying AKS-EE in a remote location, you’ll want to keep the system running 24/7, so this makes sense. For a kick-the-tires scenario, you probably don’t need to do this.

To check the current power settings of your system, run:

powercfg /a

You can see above that I’ve already disabled the power standby settings. If any of them are enabled, run the following commands:

powercfg /x -standby-timeout-ac 0

powercfg /x -standby-timeout-dc 0

powercfg /hibernate off

reg add HKLM\System\CurrentControlSet\Control\Power /v PlatformAoAcOverride /t REG_DWORD /d 0

If power standby settings were enabled, reboot your system and check they’ve been disabled.

Installing AKS-Edge Essentials tools

Once the machine prep is complete, go ahead and download the AKS-Edge K8s tool from https://aka.ms/aks-edge/k8s-msi

It’s an MSI file, so go ahead and install on your system however you prefer (next, next, next… ;) ).

Once that’s done, we need to clone the AKS-EE repo to the local system. I’ve done this via VS Code.

Open the Source Control blade

Click on Clone Repository

Paste the url https://github.com/Azure/AKS-Edge.git

Click on the Clone from URL option

In the window that pops up, select the folder where you want to store the repo on your local system.

In my example, I cloned to a directory called C:\repos

A message will pop up in VS Code stating that the repo is cloning.

Click Open to display folder in VS Code

Open a PowerShell terminal within VS Code, and navigate to the tools directory from the repo you’ve just cloned.

From the PowerShell terminal window, run the following command:

.\AKSEdgePrompt.cmd

It will open a new PowerShell window as Admin, check that Hyper-V is enabled and that AKS Edge Essentials for K8s is installed. Remember to run further commands in this newly instantiated PowerShell window.

Within this window, you can check that the AKSEdge module has been imported.

Get-Command -Module AKSEdge | Format-Table Name, Version

Deploying the cluster

I use the term cluster loosely, as I’m running this from my laptop, but you get my meaning.

Given this, I need to edit the config to reflect a single machine scenario.

From VS Code:

Open the aksedge-config.json file in the tools directory.

As I'm using K8s, edit the NetworkPlugin parameter to use calico

"NetworkPlugin": "calico",

You need to select a spare IP on your local network for the VM that’s provisioned. My home router uses 192.168.1.0/24, so I selected a free address not in the DHCP lease range.

Select another free IP on your network for the API end point

…and now enter the start and end range for the kubernetes services

If you’re interested, you can check out the config options and a further description of what each setting does here: https://learn.microsoft.com/en-us/azure/aks/hybrid/aks-edge-deployment-config-json
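Before saving the config, it can be worth sanity-checking the addresses you picked. The sketch below is a standalone Python check, not part of the AKS Edge tooling: it confirms the VM address, API endpoint and service range all sit inside the local subnet, do not collide with each other, and stay out of the DHCP lease range. The function name, the specific addresses and the DHCP range are all assumptions for illustration; only the 192.168.1.0/24 subnet comes from my setup.

```python
import ipaddress


def check_addressing(subnet, vm_ip, api_ip, service_start, service_end, dhcp_range=None):
    """Return a list of problems found with the chosen addresses (empty if none)."""
    network = ipaddress.ip_network(subnet)
    fixed = {
        "VM": ipaddress.ip_address(vm_ip),
        "API endpoint": ipaddress.ip_address(api_ip),
    }
    start = ipaddress.ip_address(service_start)
    end = ipaddress.ip_address(service_end)
    problems = []

    # Every address has to belong to the local subnet.
    labelled = {**fixed, "service range start": start, "service range end": end}
    for label, addr in labelled.items():
        if addr not in network:
            problems.append(f"{label} {addr} is outside {network}")

    if start > end:
        problems.append("service range start is after its end")
    if fixed["VM"] == fixed["API endpoint"]:
        problems.append("VM and API endpoint share the same address")
    for label, addr in fixed.items():
        if start <= addr <= end:
            problems.append(f"{label} {addr} falls inside the service range")

    # Optional: keep everything clear of the router's DHCP lease range.
    if dhcp_range is not None:
        dhcp_start, dhcp_end = (ipaddress.ip_address(a) for a in dhcp_range)
        for label, addr in fixed.items():
            if dhcp_start <= addr <= dhcp_end:
                problems.append(f"{label} {addr} is inside the DHCP lease range")
        if start <= dhcp_end and dhcp_start <= end:
            problems.append("service range overlaps the DHCP lease range")
    return problems


# Hypothetical values on a 192.168.1.0/24 home network.
DHCP = ("192.168.1.100", "192.168.1.200")
GOOD = check_addressing("192.168.1.0/24", "192.168.1.10", "192.168.1.11",
                        "192.168.1.20", "192.168.1.30", dhcp_range=DHCP)
BAD = check_addressing("192.168.1.0/24", "192.168.1.150", "192.168.2.5",
                       "192.168.1.20", "192.168.1.30", dhcp_range=DHCP)
print(GOOD)
print(BAD)
```

This only catches planning mistakes on paper. It cannot tell you whether an address is already in use by another device, so a quick ping or a look at the router's client list is still worthwhile.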

Once you’ve saved the config file, go ahead to the PowerShell window that you opened via the AKSEdgePrompt.cmd and run the following to start the deployment (assuming you are still in the tools directory in the repo):

New-AksEdgeDeployment -JsonConfigFilePath .\aksedge-config.json

If everything is in place, that should start the deployment.

When prompted, choose whether you want to send optional or only required diagnostics data.

.. eventually it will complete…

Let’s prove we can use kubectl to talk to the API and get some info

kubectl get nodes -o wide

kubectl get pods -A -o wide

Cool, the base cluster is deployed :)
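If you would rather check node health from a script than eyeball the table, kubectl can emit JSON (kubectl get nodes -o json) and the Ready condition can be read from it. Here is a minimal Python sketch of that parsing step; the node name and the trimmed-down JSON document are hypothetical, and in practice you would feed in the real command output.

```python
import json

# Trimmed, hypothetical example of what `kubectl get nodes -o json` returns.
SAMPLE_OUTPUT = """
{
  "items": [
    {
      "metadata": {"name": "example-node"},
      "status": {
        "conditions": [
          {"type": "MemoryPressure", "status": "False"},
          {"type": "Ready", "status": "True"}
        ]
      }
    }
  ]
}
"""


def node_readiness(kubectl_json):
    """Map each node name to True/False based on its Ready condition."""
    document = json.loads(kubectl_json)
    readiness = {}
    for item in document.get("items", []):
        name = item["metadata"]["name"]
        conditions = item.get("status", {}).get("conditions", [])
        readiness[name] = any(
            c.get("type") == "Ready" and c.get("status") == "True"
            for c in conditions
        )
    return readiness


READINESS = node_readiness(SAMPLE_OUTPUT)
print(READINESS)
```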

Something to be aware of:

In the repo’s tools directory, a file will be created called servicetoken.txt. Take care of this file as it is a highly-privileged token used to admin the K8s cluster. Make sure not to commit the file into your repo. You will need this token for certain activities later on.
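One simple guard against committing the token by accident is to add it to the repo's .gitignore. The Python sketch below does that idempotently; the helper name is my own, and the demo runs against a temporary directory standing in for the repo root, so point it at your actual clone if you want to use it.

```python
import tempfile
from pathlib import Path


def ensure_ignored(repo_root, pattern="servicetoken.txt"):
    """Add pattern to <repo_root>/.gitignore if missing. Returns True if added."""
    gitignore = Path(repo_root) / ".gitignore"
    lines = gitignore.read_text(encoding="utf-8").splitlines() if gitignore.exists() else []
    if pattern in (line.strip() for line in lines):
        return False  # already ignored, nothing to do
    lines.append(pattern)
    gitignore.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return True


# Demo against a throwaway directory standing in for the repo root.
with tempfile.TemporaryDirectory() as repo:
    FIRST_CALL = ensure_ignored(repo)
    SECOND_CALL = ensure_ignored(repo)
    CONTENT = (Path(repo) / ".gitignore").read_text(encoding="utf-8")

print(FIRST_CALL, SECOND_CALL, repr(CONTENT))
```

A pattern without a slash matches the file name at any depth in the repo, so it covers the copy in the tools directory. It will not help if the file has already been committed; in that case treat the token as exposed.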

Connecting AKS Edge Essentials to Azure Arc

The provided repo has some nice tools to help you get connected to Azure Arc, but we need to get some configuration information prior to running the scripts. To make it easy, here are some of the az cli commands to get what you need.

az login

#optional - run if you have multiple subscriptions and want to select which one to use

az account set --subscription '<name of the subscription>'

#SubscriptionId

az account show --query id -o tsv

#TenantId

az account show --query tenantId -o tsv

Edit the aide-userconfig.json file from the tools directory

Change the following parameters using the values captured

"SubscriptionName": "<Azure Subscription Name>",
"SubscriptionId": "<SubscriptionId>",
"TenantId": "<TenantId>",

There’s no indication in the docs whether to change the AksEdgeProduct from K3s to K8s. I did, ‘just in case’.

"AksEdgeProduct": "AKS Edge Essentials - K8s (Public Preview)"

Go ahead and name your resource group, service principal and region to deploy to. If the resource group and service principal do not exist, the deployment routine will create them for you. In the case of the service principal, it will assign contributor rights to the resource group scope.
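It is easy to leave one of the angle-bracket placeholders in the config by mistake. As a hedged sketch, the Python below walks any parsed JSON document and reports values that still look like an unfilled placeholder. The sample config is a flat, hypothetical fragment using the three keys shown above (the real aide-userconfig.json has more settings and may nest them), and the all-zero GUID is a dummy value.

```python
import json
import re

# A value is treated as an unfilled placeholder if it is entirely "<...>".
PLACEHOLDER = re.compile(r"^<[^<>]+>$")


def find_placeholders(node, path=""):
    """Return the JSON paths whose string values still look like <placeholders>."""
    found = []
    if isinstance(node, dict):
        for key, value in node.items():
            found.extend(find_placeholders(value, f"{path}.{key}" if path else key))
    elif isinstance(node, list):
        for index, value in enumerate(node):
            found.extend(find_placeholders(value, f"{path}[{index}]"))
    elif isinstance(node, str) and PLACEHOLDER.match(node.strip()):
        found.append(path)
    return found


# Hypothetical, flattened fragment; the real file layout may differ.
SAMPLE_CONFIG = json.loads("""
{
  "SubscriptionName": "My Subscription",
  "SubscriptionId": "00000000-0000-0000-0000-000000000000",
  "TenantId": "<TenantId>"
}
""")

UNFILLED = find_placeholders(SAMPLE_CONFIG)
print(UNFILLED)
```

To run it against the real file, load it with json.load and pass the result to find_placeholders; an empty list means nothing obvious was left unfilled.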

Once the config file is saved, we’re ready to set up Arc.

From the PowerShell window, I decided to move to the root of the repo (not tools as previously), and ran the following command to set up the resource group, service principal permissions and role assignment:

.\tools\scripts\AksEdgeAzureSetup\AksEdgeAzureSetup.ps1 .\tools\aide-userconfig.json -spContributorRole

A warning is shown that the output includes credentials that you must protect. If you open the aide-userconfig.json file, you’ll notice it is populated with a service principal id and corresponding password created when the script was run.

If you want to test the credentials, run the following:

.\tools\scripts\AksEdgeAzureSetup\AksEdgeAzureSetup-Test.ps1 .\tools\aide-userconfig.json

To test the user config prior to initialization:

Read-AideUserConfig

Get-AideUserConfig

Time to initialize…

Initialize-AideArc

…and now to connect…

Connect-AideArc

Once complete, we can check the Azure portal to see that the Arc resources are present in the resource group I specified in the config file. There will be one entry for ‘Server’ (my laptop) and another for Kubernetes.

Select the Kubernetes - Azure Arc resource and then select ‘Namespaces’.

We need to paste the service token that is created when Connect-AideArc is run. This is located in .\tools\servicetoken.txt

Paste it in the Service account bearer token field.

You’ll now be able to query resources in your cluster.

All in all, the tools and scripts developed for AKS Edge Essentials work really well and I didn’t come across any issues. It bodes well for when it moves to GA.

Next steps for me are to investigate further scenarios and see what I can do on the platform.
